Google Lens transforms how users interact with visual information. This powerful tool allows people to search and learn about their surroundings using their smartphone camera. Google Lens history provides a record of past searches, enabling users to revisit and learn from their visual queries.

Users can access their Google Lens history through the Google Photos app or the My Activity page. This feature helps people track their visual searches and discover new information about objects, places, and text they’ve encountered. Google stores this data to improve the service and personalize results.

Privacy-conscious users have options to manage their Google Lens history. They can delete individual searches, clear their entire history, or set up auto-delete options. These controls give users power over their data while still benefiting from the convenience of visual search technology.

Recent Developments in Google Lens Technology

Since its launch, Google Lens has grown from a simple image recognition tool into an AI-powered platform for visual understanding. In 2024 and early 2025, the pace of innovation accelerated, with several major updates that refined its core functionality and extended its reach into video search, e-commerce, and wearables. These changes mark a significant leap forward in how we interact with the world through our devices.

Video and Multimodal Search Take the Spotlight

One of the most significant updates to Google Lens is support for video-based search. Instead of relying solely on static images, users can record a short video clip while asking a question aloud, combining motion, context, and spoken language. This multimodal approach lets Lens handle far more complex queries.

Imagine recording a clip of your malfunctioning washing machine while asking, “Why is this making that noise?” or pointing to a plant in your garden and asking, “Is this overwatered?” Lens now analyzes both the video’s content and your voice input, offering tailored, context-rich results. It’s a game-changer for troubleshooting, DIY tasks, and real-world problem-solving where a still image just isn’t enough.

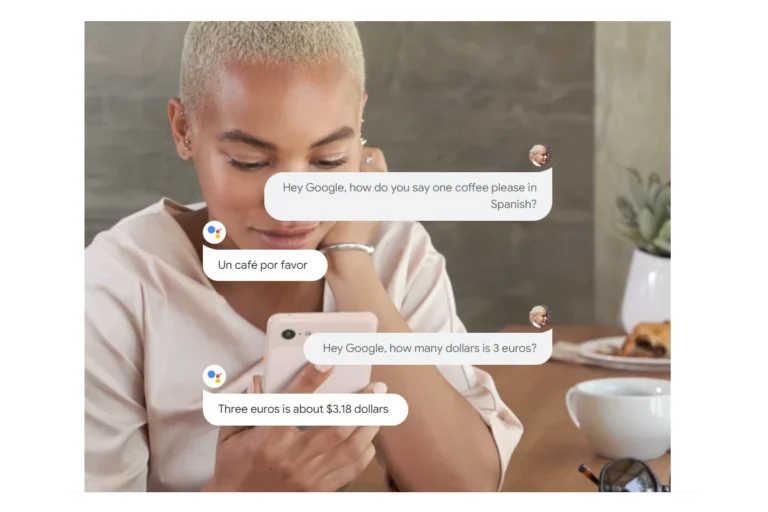

Voice Input + Vision = Smarter Interactions

This upgrade isn’t just about video. Lens has evolved to become a more conversational tool. You can talk to it like you would a human assistant. By integrating natural language understanding with real-time visual analysis, Lens can now process questions like, “Where can I buy this?” or “What’s the nutritional value of this meal?” as you scan with your camera. It’s a more fluid, human experience—one that feels less like searching and more like discovering.

Shopping with Lens Is Faster and Smarter

Google has quietly turned Lens into a visual shopping engine, and in 2024 it got much better at it. When you scan a product—whether it’s a handbag in a boutique window or a pair of sneakers in someone’s Instagram photo—Lens doesn’t just identify it. It pulls up real-time listings from online stores, compares prices across retailers, displays customer reviews, and even shows availability near you. This feature turns your phone into a personal shopping assistant, bridging the gap between inspiration and purchase in seconds.

iPhone Users Finally Get Full Access

Until recently, Google Lens on iOS lagged behind its Android counterpart. That changed with a major update in early 2025. Now integrated directly into the Chrome and Google apps for iPhone, Lens allows users to tap a single button to draw, highlight, or select anything on the screen to start a search. No more taking screenshots or switching apps. Whether it’s text, an object, or a product, Lens can identify and analyze it instantly—on the same screen you’re already on.

Smart Glasses Bring Lens Into the Real World

Lens is no longer limited to phones and tablets. In a major step forward for augmented reality, Google recently introduced AI-powered smart glasses that incorporate Lens-like functionality. These glasses can identify landmarks, translate signs, read menus aloud, and display contextual information in real time—all without needing to take out your phone.

Powered by Google’s Gemini assistant, the smart glasses offer voice-driven controls and visual overlays, blending Lens’s visual intelligence with hands-free convenience. Whether you’re exploring a foreign city, attending a conference, or fixing a device, this integration of AI and AR feels like a sneak peek into the future of ambient computing.

The Road Ahead for Visual Search

Google Lens is no longer just about identifying things—it’s about understanding the world in context. The combination of computer vision, AI-powered language models, and real-time data is turning our cameras into intelligent assistants capable of making sense of what we see. The platform’s evolution from still image recognition to immersive, multimodal interactions suggests we’re entering a new era of search—one where asking a question doesn’t require typing at all.

As Google continues investing in Lens, expect more integrations across devices, more intelligent context-aware features, and deeper personalization. Visual search isn’t just an add-on anymore—it’s becoming the foundation of how we interact with digital information in the physical world.

Key Takeaways

- Google Lens history records visual searches for future reference

- Users can access their history through Google Photos or My Activity

- Privacy settings allow users to manage or delete their Google Lens data

Evolution of Google Lens

Google Lens has undergone significant changes since its introduction. The image recognition technology has expanded its capabilities and integration across various platforms.

Initial Launch and Development

Google announced Lens at Google I/O 2017. The technology aimed to bring up relevant information about objects it identified using visual analysis. Google Lens initially relied on a neural network to process images and provide useful data to users.

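Lens first reached users through Google Photos and Google Assistant on Pixel phones, and a standalone Android app followed in 2018. This rollout marked the beginning of Google’s journey into advanced image recognition. Early features included identifying plants, animals, and landmarks. Users could also scan business cards to add contact information to their phones.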

Google continuously improved Lens’s accuracy and expanded its object recognition abilities. The company added features like real-time translation and text recognition in images.

Integration with Google Products

Google integrated Lens into several of its existing products to enhance user experience. The technology became a part of Google Photos, allowing users to analyze images in their library.

In Google Photos, Lens enabled users to extract text from images, identify objects, and get more information about places in their photos. This integration made it easier for users to organize and search their photo collections.

Google also added Lens to its main search app. This move allowed users to access visual search capabilities directly from their device’s home screen. The integration streamlined the process of using Lens for quick visual queries.

Expansion to Android and iOS

Google Lens expanded its reach by becoming available on both Android and iOS devices. On Android, Google integrated Lens into the camera app on many devices. This allowed users to access Lens features while taking photos.

For iOS users, Google added Lens to the Google app. This expansion brought visual search capabilities to iPhone and iPad users. iOS integration included features like scanning QR codes, translating text, and identifying objects.

Google continued to update Lens on both platforms. Later additions included solving math problems and providing shopping recommendations based on clothing and accessories in images.

Features and Capabilities

Google Lens combines powerful image recognition with search capabilities to offer users a range of practical tools. Its features span visual search, text interaction, and shopping assistance.

Real-Time Recognition

Google Lens uses artificial intelligence to identify objects, plants, animals, and landmarks in real time through a smartphone camera. Users can point their device at an item to get instant information. The app recognizes common objects like furniture, clothing, and electronics, providing details and search results.

For nature enthusiasts, Lens can identify plant species and animals. It also recognizes famous landmarks, offering historical information and facts about buildings and monuments.

Lens integrates with other Google services. Users can add events to their calendar by scanning flyers or save contact information from business cards.

Text Interaction and Translation

Lens excels at text recognition and interaction. It can extract text from images, making handwritten notes or printed documents searchable and editable.

The translation feature is particularly useful for travelers. Lens can translate text in real time from more than 100 languages. Users simply point their camera at foreign text to see an overlay with the translation.

Students benefit from the homework helper function. By scanning math problems or complex diagrams, Lens provides step-by-step solutions and explanations.

Shopping and Exploration

Google Lens transforms the shopping experience with its visual search capabilities. Users can find similar items to those they see in the real world or in images.

On supported Android devices, the related “Circle to Search” feature lets users circle an item on their screen to find matching products online. This works for clothing, furniture, accessories, and more.

Lens helps users explore their surroundings. By scanning restaurant menus, it can show photos of dishes and reviews. It also identifies products in store displays, providing prices and availability from various retailers.

User Interaction with Google Lens

Google Lens allows users to interact with the world through their smartphone cameras. The tool provides visual search capabilities and image recognition features for various purposes.

Managing Google Lens Activity

Users can control their Google Lens activity through their Google Account settings. To view or delete Lens searches, they can visit myactivity.google.com and navigate to the Lens page. This page displays a chronological list of Lens searches, including dates and times.

Google offers options to delete individual searches or the entire search history. Users can also set up automatic deletion of their Lens activity after a specified time period. For those who prefer not to save Lens searches, Google provides an option to turn off Web & App Activity in their Account settings.
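To make the idea of automatic deletion concrete, here is a minimal Python sketch of how a retention window behaves. It is an illustration only, not Google's implementation: the `LensActivity` record, the `apply_auto_delete` function, and the sample searches are all hypothetical, and the 90-day window is just an example value.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class LensActivity:
    """Hypothetical record of one saved Lens search (not Google's schema)."""
    query: str
    searched_at: datetime


def apply_auto_delete(history, retention_days, now):
    """Return only the entries that fall inside the retention window."""
    cutoff = now - timedelta(days=retention_days)
    return [item for item in history if item.searched_at >= cutoff]


# Made-up sample data for illustration.
now = datetime(2025, 3, 1, tzinfo=timezone.utc)
history = [
    LensActivity("monstera plant", datetime(2024, 6, 10, tzinfo=timezone.utc)),
    LensActivity("restaurant menu", datetime(2025, 1, 20, tzinfo=timezone.utc)),
    LensActivity("running shoes", datetime(2025, 2, 25, tzinfo=timezone.utc)),
]

# With a 90-day window, the June 2024 search is older than the cutoff and is dropped.
kept = apply_auto_delete(history, retention_days=90, now=now)
print([item.query for item in kept])  # ['restaurant menu', 'running shoes']
```

A shorter window removes more of the history, while a longer one keeps older searches available for revisiting. That is the trade-off between privacy and convenience that the auto-delete setting controls.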

The visual search history feature in Google Lens helps users revisit past searches easily. This can be useful for finding information on previously identified objects, plants, or landmarks. Users can enable or disable this feature based on their privacy preferences and search needs.

Privacy Considerations

Google Lens’s visual search capabilities raise important privacy concerns. Users should be aware of how their data is handled and have control over their search history.

Control Over Data

Google offers users options to manage their visual search data. In the Google app, users can access their account settings to control Web & App Activity. This setting includes a checkbox to enable or disable visual search history. By default, this feature may be turned off to protect user privacy.

Users can choose to include visual search history if they want to revisit past discoveries. This option allows for a more personalized experience but requires sharing more data with Google. The decision to enable this feature should be based on individual comfort levels with data collection.

Deleting Activity Information

Google provides tools for users to view and delete their Lens activity. The My Activity hub is the central location for managing this data. Users can sign in to their Google Account and navigate to the Activity controls section.

In the “See and delete activity” area, users can find a Google Lens icon. Clicking this icon displays a list of past Lens searches. Users can delete individual items or their entire Lens history, which gives them control over their digital footprint and lets them remove sensitive or unwanted search data.

Regular review and deletion of Lens activity can help maintain privacy. Users should consider setting reminders to check and clean their search history periodically.

Frequently Asked Questions

Google Lens offers various options for managing your visual search history. Users can view, delete, and adjust settings across different devices.

How can I view my Google Lens history on iPhone?

Open the Google app on your iPhone. Tap your profile picture, then select “Search history.” Look for the Google Lens icon to access your visual search history.

What are the steps to delete my Google Lens history?

Go to Google’s My Activity page. Sign in to your account. Click on “Delete activity by” and choose “Google Lens” from the options. Select the time range and confirm deletion.

How do I change my Google Lens history settings?

Visit the Google Account Activity Controls page. Find the “Web & App Activity” section. Check or uncheck the option to include visual search history, which controls whether your Lens searches are saved.

Is there a way to access Google Lens history on Android devices?

Yes. Open the Google app on your Android device. Tap your profile picture and select “Search history.” Look for the Google Lens icon to view your visual search history.

Can Google Lens save the images it scans?

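Google Lens processes the images you scan in order to return results. Whether those images are kept depends on your settings. If Web & App Activity is on and visual search history is included, your Lens searches are saved to your Google Account. Otherwise, scanned images are not kept in your history unless you choose to save them manually.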

What is the process to save an image identified by Google Lens?

After scanning an image with Google Lens, tap the image result you want to save. Look for a “Save” or “Download” option. Tap it to save the image to your device’s gallery or a designated folder.