Crafting messages for AI systems has become an essential skill in today’s tech landscape. When working with generative AI tools, the quality of your input directly affects what you get back. Learning how to write effective prompts is one of the most important factors in getting useful, accurate results from AI systems.

AI prompting is more than just asking questions. It involves providing context, examples, and clear instructions to guide the AI toward producing exactly what you need. Prompt engineering has emerged as a distinct skill that combines creativity with technical understanding to achieve optimal results from generative AI models.

The art of prompting requires understanding how these systems interpret information. Different types of prompts serve different purposes—from simple instructions to complex multi-part requests with few-shot examples that teach the AI how to respond. With practice, anyone can learn to communicate effectively with AI and unlock its full potential.

How to Use AI Prompting

Generative AI has revolutionized how we create — from writing and coding to designing and brainstorming. But the secret to getting the best results from tools like ChatGPT, Claude, Gemini, or DALL·E lies in prompting — the art of crafting effective instructions for AI.

In this guide, you’ll learn what AI prompting is, why it matters, and how to use it effectively to get accurate, creative, and high-quality results from generative AI models.

🧠 What Is AI Prompting?

AI prompting is the process of writing structured instructions (called prompts) that guide a generative AI model to produce a desired output.

A prompt can be as simple as a single instruction:

“Write a poem about the ocean.”

Or as complex as a multi-step instruction:

“Act as a travel planner and create a 5-day itinerary for Tokyo, including local food recommendations and cultural experiences.”

The better your prompt, the better your results.

(source: IBM Prompt Engineering Guide, Learn Prompting)

⚙️ How Generative AI Uses Prompts

Generative AI models like GPT, Claude, and Gemini are trained on massive datasets of text, images, and code. When you give them a prompt, they analyze it for context, intent, and structure — then generate a response that statistically fits your request.

Think of prompting as a conversation:

- You tell the AI what you want.

- The AI interprets your request based on patterns it has learned.

- You refine your prompt until you get the output you need.

🪄 Types of AI Prompts

There are several types of prompts you can use depending on your goal:

| Type | Example | Use Case |
|---|---|---|
| Instructional Prompt | “Summarize this article in three bullet points.” | Quick tasks and summaries |
| Creative Prompt | “Write a sci-fi story about a robot learning emotions.” | Storytelling, brainstorming |
| Analytical Prompt | “Compare the marketing strategies of Apple and Samsung.” | Reports, essays, analysis |
| Role-based Prompt | “Act as a financial advisor and explain how to build a retirement plan.” | Expert simulations |
| Chain-of-Thought Prompt | “Explain your reasoning step by step before giving the final answer.” | Problem-solving, reasoning tasks |

(source: Google Cloud: Introduction to Prompting)

🧩 How to Write Effective Prompts

Here’s a simple framework to improve your prompting skills:

1. Be Clear and Specific

Avoid vague requests like “Write something about AI.”

Instead, specify your goal:

“Write a 200-word introduction explaining how AI is transforming healthcare.”

2. Provide Context

Give the AI background information or examples:

“You are a tech journalist writing for beginners. Explain AI prompting in simple terms.”

3. Define Output Format

Tell the AI how you want the response structured:

“List the top 5 benefits of AI in bullet points with short explanations.”

4. Use Role Assignments

Setting a role helps the AI adopt a persona:

“Act as a university professor and explain quantum computing to first-year students.”

5. Iterate and Refine

Don’t expect perfection on the first try.

Adjust your prompt based on the output — add constraints, examples, or tone instructions.

(source: Forbes: How to Write Amazing Generative AI Prompts)

🧠 Advanced Prompting Techniques

Once you’re comfortable with the basics, try these advanced methods:

🔁 Few-Shot Prompting

Provide examples to guide the AI’s response.

Example:

Q: What is the capital of France?

A: Paris.

Q: What is the capital of Japan?

A:

This helps the AI learn the pattern you expect.

🧩 Chain-of-Thought Prompting

Encourage the AI to explain its reasoning before giving an answer.

“Explain your reasoning step by step before solving the equation.”
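To make chain-of-thought replies easier to use, a prompt can ask for the reasoning first and then a clearly marked final line. The Python sketch below assumes a convention where the answer line starts with “Final answer:”; that marker and both helper names are hypothetical choices, and the sample reply is invented rather than real model output.

```python
COT_SUFFIX = (
    "Explain your reasoning step by step. "
    "Then give the result on its own line, starting with 'Final answer:'."
)


def add_chain_of_thought(task):
    """Append a step-by-step instruction plus a marker for the final answer."""
    return f"{task}\n\n{COT_SUFFIX}"


def extract_final_answer(reply):
    """Return the text after the last 'Final answer:' marker, or None if absent."""
    marker = "Final answer:"
    index = reply.rfind(marker)
    if index == -1:
        return None
    return reply[index + len(marker):].strip()


prompt = add_chain_of_thought("Solve for x: 2x + 6 = 14.")

# A made-up reply, used only to demonstrate the parsing step.
sample_reply = (
    "Subtract 6 from both sides: 2x = 8.\n"
    "Divide both sides by 2: x = 4.\n"
    "Final answer: x = 4"
)
print(extract_final_answer(sample_reply))  # x = 4
```

Keeping the reasoning and the answer separate lets you read the steps when you need to check them, while still pulling out a single clean result.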

🧱 Structured Prompts

Use headings, bullet points, or numbered steps to organize your request.

This helps the AI produce more readable and consistent results.
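A minimal Python sketch of a structured prompt, rendering named sections as Markdown-style headings with bullet lists. The section names and the `build_structured_prompt` helper are illustrative assumptions; models do not require any particular heading syntax.

```python
def build_structured_prompt(sections):
    """Render each (heading, content) pair as a Markdown-style section.

    `content` may be a string or a list of strings; lists become bullet points.
    """
    parts = []
    for heading, content in sections:
        if isinstance(content, list):
            body = "\n".join(f"- {item}" for item in content)
        else:
            body = content
        parts.append(f"## {heading}\n{body}")
    # A blank line between sections keeps them visually distinct.
    return "\n\n".join(parts)


prompt = build_structured_prompt([
    ("Task", "Summarize the attached quarterly report."),
    ("Audience", "Executives reviewing it before a meeting"),
    ("Requirements", ["No more than 100 words", "Focus on statistical data", "Use a neutral tone"]),
    ("Output format", "Three bullet points"),
])
print(prompt)
```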

🧑🏫 Meta Prompting

Ask the AI to analyze your prompt and suggest improvements.

“Review this prompt and tell me how to make it more effective.”

💡 Examples of Effective Prompts

| Goal | Weak Prompt | Improved Prompt |

|---|---|---|

| Blog Writing | “Write about digital marketing.” | “Write a 500-word blog post explaining digital marketing trends for small businesses in 2025, with examples and actionable tips.” |

| Coding | “Write Python code.” | “Write a Python script that scrapes the top 5 trending YouTube videos and prints their titles.” |

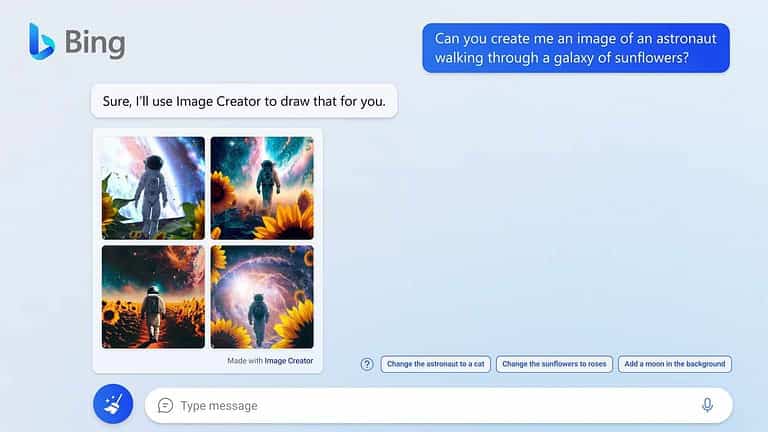

| Design | “Create an image.” | “Generate an image of a futuristic city skyline at sunset in cyberpunk style.” |

(source: Finest of the Fine: AI Prompts Guide)

🧭 Best Practices for Using AI Prompting

- ✅ Start simple, then add complexity.

- 🧩 Always define your goal, tone, and audience.

- 🔍 Review outputs critically — AI isn’t always accurate.

- 🗣️ Treat prompting as a dialogue, not a one-time command.

- 🧠 Keep learning — prompt engineering evolves quickly.

🚀 Why Prompting Matters

Mastering AI prompting turns you from a passive user into an AI power user.

It helps you:

- Save time and improve productivity

- Generate more accurate and creative results

- Communicate better with AI systems

- Unlock the full potential of generative models

(source: Learn Prompting)

🏁 Final Thoughts

Prompting is the foundation of effective generative AI use. Whether you’re writing, designing, coding, or analyzing data, strong prompt engineering skills can dramatically improve your results.

Start experimenting with different prompt styles today, and remember: the more specific and structured your prompt, the more useful the AI’s responses tend to be.

Further Reading:

- Prompt Engineering Guide – IBM

- Learn Prompting: Free AI Prompting Course

- Google Cloud: Introduction to Prompt Design

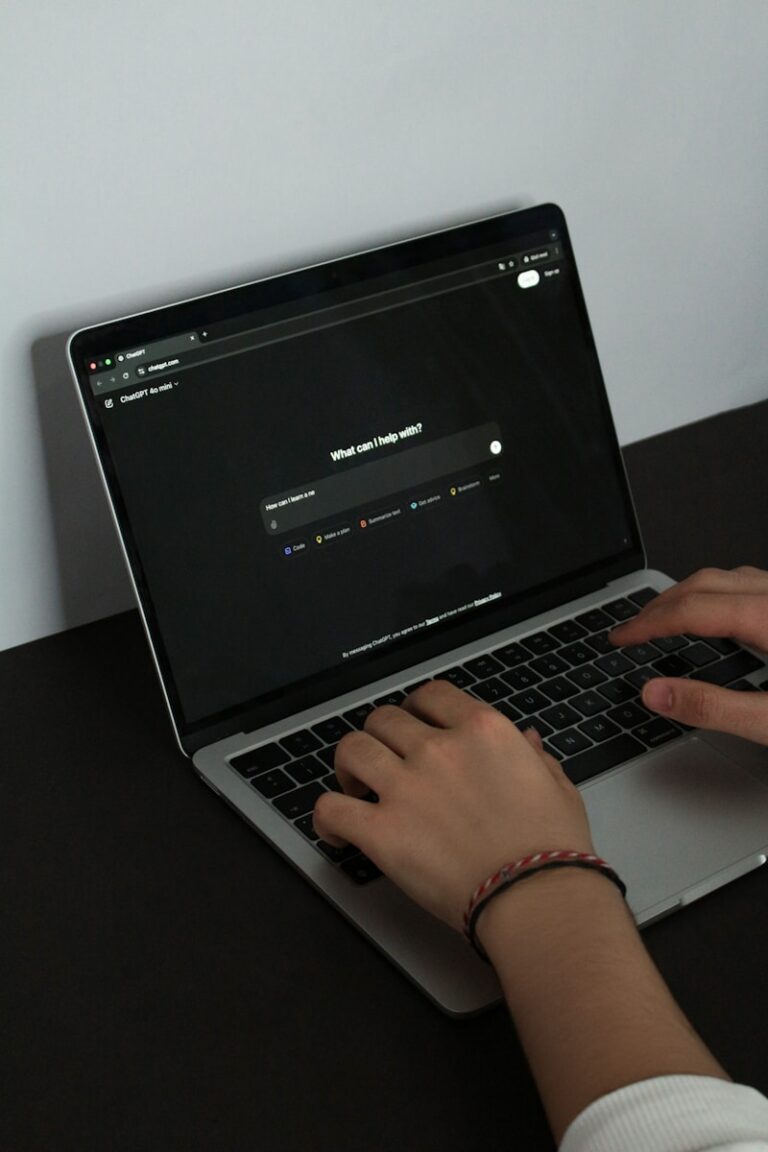

How to Search on ChatGPT

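When this guide was written, ChatGPT Plus users could search the web by selecting GPT-4 with the “Browse with Bing” option in the model selector. Web search in ChatGPT has since been reworked and renamed across versions, so look for the current web search option in the ChatGPT interface. Once it is enabled, type your query naturally and ChatGPT will retrieve current information from the web.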

Key Takeaways

- Well-crafted prompts with clear instructions and relevant context produce better AI outputs.

- Effective prompt engineering combines technical understanding with creative approaches to guide AI behavior.

- Including examples in prompts helps AI models understand the desired format and style of response.

Understanding Generative AI

Generative AI represents a revolutionary approach to artificial intelligence that creates new content rather than simply analyzing existing data. These systems have transformed how we interact with technology and solve complex problems.

What Is Generative AI?

Generative AI refers to AI systems that can create new content such as text, images, music, or code based on the data they were trained on. Unlike traditional AI that focuses on classification or prediction tasks, generative AI creates original outputs that didn’t exist before.

At the heart of modern generative AI are large language models (LLMs) like GPT-4, PaLM, and Claude. These models contain billions of parameters (some developers do not disclose exact counts), allowing them to understand and generate human-like text.

These systems work by predicting what should come next in a sequence (typically the next token, a word or word fragment) based on patterns learned during training. When a user provides a prompt, the model processes it and generates a response one token at a time, each token chosen as a likely continuation of everything before it.
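A real LLM uses a neural network over tokens rather than simple word counts, but a toy bigram model illustrates the core idea of predicting the next item from patterns seen in training data. This Python sketch is an illustration only; the corpus and helper names are made up.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


corpus = "the cat sat on the mat . the cat ate . the dog sat on the rug ."
model = train_bigrams(corpus)
print(predict_next(model, "the"))  # cat
print(predict_next(model, "sat"))  # on
```

The toy model can only repeat patterns it has counted; an LLM generalizes far beyond this, but the "predict the next piece from learned patterns" loop is the same basic shape.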

Popular generative AI applications include:

- Text generation and summarization

- Image creation from text descriptions

- Code completion and generation

- Music composition

Key Principles of Generative Models

Generative models operate on several fundamental principles that enable their creative capabilities. The most important principle is pattern recognition – these models identify and learn complex patterns in their training data.

AI prompts serve as instructions that guide these models toward desired outputs. A well-crafted prompt effectively communicates what information is needed and in what format.

These systems also employ techniques like:

- Transfer learning – applying knowledge from one domain to another

- Few-shot learning – generating content after seeing just a few examples

- Reinforcement learning from human feedback – improving outputs based on human preferences

Most modern large language models use a transformer architecture, which processes all the tokens of an input in parallel and uses an attention mechanism to relate them to one another. This lets the model connect words or concepts regardless of how far apart they appear within a prompt, up to the limit of its context window.
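The attention mechanism at the core of a transformer can be sketched in a few lines. This is a simplified, single-query version of scaled dot-product attention in plain Python; real models learn the query, key, and value vectors, use many attention heads, and operate on large matrices, so the hand-picked 2-D vectors here are purely illustrative.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for a single query vector.

    Every key is scored against the query regardless of its position,
    so a distant token can receive as much weight as a nearby one.
    """
    dim = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim) for key in keys]
    weights = softmax(scores)
    # The output is the weighted average of the value vectors.
    output = [
        sum(w * value[i] for w, value in zip(weights, values))
        for i in range(len(values[0]))
    ]
    return weights, output


# Toy vectors for a 4-token sequence; the last key best matches the query.
query = [1.0, 0.0]
keys = [[0.0, 1.0], [0.2, 0.9], [0.1, 1.0], [1.0, 0.0]]
values = [[1.0, 0.0], [1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
weights, output = attention(query, keys, values)
print([round(w, 3) for w in weights])
```

The last token gets the largest weight purely because its key matches the query; position plays no role in the score itself (transformers add positional information separately).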

The Role of Prompt Engineering

Prompt engineering serves as the bridge between human intent and AI output, guiding generative AI systems toward producing desired results through carefully crafted instructions.

Defining Prompt Engineering

Prompt engineering is the art and science of designing and optimizing prompts to effectively communicate with AI models. It involves creating specific instructions that help large language models (LLMs) understand exactly what users want to achieve.

A prompt engineer crafts inputs using particular structures, phrasings, and formats to yield the desired output from AI models. This discipline requires understanding both the capabilities and limitations of the underlying AI technology.

Effective prompt engineering combines technical knowledge with creativity. Practitioners experiment with different approaches to find what works best for specific use cases.

The field has emerged as a specialized skill set as more organizations implement generative AI tools in their workflows.

Importance in Generative AI

Prompt engineering directly impacts the quality and usefulness of AI-generated content. Well-designed prompts can dramatically improve the relevance, accuracy, and appropriateness of AI outputs.

Without effective prompting, even the most sophisticated generative AI tools may produce irrelevant or incorrect results. The right prompt serves as a critical control mechanism for directing AI behavior.

Organizations increasingly recognize that prompt engineering skills are essential for maximizing their AI investments. A well-crafted prompt can:

- Reduce the number of iterations needed to get useful results

- Improve consistency across multiple AI-generated outputs

- Reduce, though not eliminate, common AI pitfalls like hallucinations or off-topic responses

The process of crafting effective prompts enables direct interaction with AI using plain language, making advanced technology accessible to more users without requiring programming knowledge.

Designing Effective Prompts

Creating prompts that generate high-quality AI responses requires skill and practice. Well-crafted prompts can dramatically improve the usefulness of AI outputs and help users achieve their specific goals.

Basics of Effective Prompting

Good prompts start with clarity about what you want. Begin by defining your objective before writing your prompt. What specific outcome are you seeking? A blog post, code solution, or creative story?

Be specific and detailed in your instructions. Instead of asking “Write about dogs,” try “Write a 300-word guide about potty training Border Collie puppies for first-time dog owners.” The more precise your request, the better the AI can deliver what you need.

Use complete sentences and proper grammar. Effective prompts typically follow a pattern of instruction + context + examples. For instance:

- Instruction: “Write a product description for…”

- Context: “…a waterproof hiking backpack aimed at serious trekkers”

- Examples: “Include benefits like ‘stays dry in downpours’ and ‘protects electronics’”

Remember to include any formatting preferences or tone requirements. This helps the AI understand not just what to write, but how to write it.
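The instruction + context + examples pattern, plus tone and format preferences, maps naturally onto a small data structure. A minimal Python sketch; `PromptSpec` and its field names are hypothetical, and the labels (“Context:”, “Tone:”, “Format:”) are one possible convention rather than a requirement.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PromptSpec:
    """Hypothetical container for the parts of a prompt."""

    instruction: str
    context: str = ""
    examples: List[str] = field(default_factory=list)
    tone: str = ""
    output_format: str = ""

    def render(self) -> str:
        """Join the non-empty parts in instruction, context, examples, style order."""
        parts = [self.instruction]
        if self.context:
            parts.append(f"Context: {self.context}")
        if self.examples:
            parts.append("Include points such as: " + "; ".join(self.examples))
        if self.tone:
            parts.append(f"Tone: {self.tone}")
        if self.output_format:
            parts.append(f"Format: {self.output_format}")
        return "\n".join(parts)


spec = PromptSpec(
    instruction="Write a product description for a waterproof hiking backpack.",
    context="The audience is serious trekkers.",
    examples=["stays dry in downpours", "protects electronics"],
    tone="confident and practical",
    output_format="one paragraph of about 80 words",
)
print(spec.render())
```

Leaving a field empty simply drops that line, so the same structure works for quick one-line prompts and fully specified ones.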

Prompt Engineering Techniques

Prompt engineering involves strategically crafting instructions to optimize AI responses. One powerful technique is role prompting – asking the AI to adopt a specific persona. For example: “As an experienced pediatrician, explain sleep training methods.”

Chain-of-thought prompting asks the AI to break down complex problems into steps. This works well for math problems or logical reasoning tasks. For example: “Solve this problem step by step: If a shirt costs $25 after a 30% discount, what was the original price?”
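When checking the model’s step-by-step answer, the expected reasoning is short. A 30% discount means the customer pays 70% of the original price P:

```latex
\begin{aligned}
P \times (1 - 0.30) &= 25 \\
0.70\,P &= 25 \\
P &= \frac{25}{0.70} \approx 35.71
\end{aligned}
```

So the original price was about $35.71. A common wrong answer is $32.50 (adding 30% to $25), which fails because the discount was taken from the larger original price, not from $25.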

Few-shot learning provides examples of desired outputs. For instance:

Convert these sentences to past tense:

1. I walk to the store → I walked to the store

2. She runs quickly → She ran quickly

3. They eat lunch →

AI prompt best practices also include iterative refinement. Start with a basic prompt, evaluate the response, then adjust for better results.

Avoiding Ambiguity in Prompts

Ambiguous prompts lead to unpredictable results. Use precise language and avoid vague terms like “good,” “better,” or “interesting.” Instead of “Write a good email,” try “Write a professional email requesting a deadline extension, using a respectful tone.”

Define key terms when needed. If asking for a “modern website design,” specify what “modern” means to you – perhaps “minimalist layout, sans-serif fonts, and ample white space.”

Beware of prompts with multiple interpretations. The request “Write about banks” could refer to financial institutions or river edges. Clarify with “Write about commercial banking institutions and their primary services.”

Set clear parameters around length, format, and audience. “Write a 500-word blog post about renewable energy for high school students” provides much-needed guardrails for the AI.
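Some of these checks can be automated before a prompt is sent. The sketch below is a rough heuristic in Python, not a standard tool: `lint_prompt` is hypothetical, it flags only the vague words named above plus prompts with no numeric length, and it does not check format or audience.

```python
import re

# Vague words named in the text above; extend the set for your own use.
VAGUE_TERMS = {"good", "better", "interesting"}


def lint_prompt(prompt):
    """Return a list of warnings about possible ambiguity in `prompt`."""
    warnings = []
    words = re.findall(r"[a-z]+", prompt.lower())
    for term in sorted(VAGUE_TERMS.intersection(words)):
        warnings.append(f"vague term: '{term}'")
    # Look for an explicit length such as "500-word" or "3 bullet points".
    if not re.search(r"\d+[- ]?(word|sentence|bullet|paragraph)", prompt.lower()):
        warnings.append("no explicit length (e.g. '500-word')")
    return warnings


print(lint_prompt("Write a good email"))
print(lint_prompt("Write a 500-word blog post about renewable energy for high school students"))
```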

Providing Context in Prompts

Context helps AI understand the bigger picture. Explain relevant background information and why you need the content. “I’m creating educational materials for 3rd graders learning about photosynthesis. Write a simple explanation using analogies they would understand.”

Specify the intended audience. Content for industry experts should differ from general public explanations. “Write a technical explanation of blockchain for senior software developers” will yield different results than “Explain blockchain to elementary students.”

Include relevant constraints or requirements. For example, “Write a product description for an eco-friendly water bottle. The company values sustainability and targets outdoor enthusiasts. The description must emphasize recyclable materials and insulation properties.”

Guiding AI output with context leads to more tailored, useful responses that meet specific needs rather than generic information.

Setting the Scene for AI Interaction

Establish the right environment for productive AI collaboration. Begin by determining the appropriate tone and style. “Write in a conversational tone with occasional humor” guides the AI’s voice.

Create explicit boundaries around content. If certain topics should be avoided, state this clearly. “Write a children’s story about friendship without any references to danger, fear, or conflict.”

Use formatting instructions to shape the response structure. “Format the response as a bulleted list with bold headings for each main point” ensures readability.

The art of prompting involves thinking like a director – you’re setting up a scene and guiding the AI’s performance. Provide examples of desired outputs when possible to demonstrate exactly what you’re looking for.

When working on creative projects, consider giving the AI permission to be imaginative within parameters: “Create an original fantasy creature description using vivid sensory details while keeping it family-friendly.”

Specific Use Cases of AI Prompting

AI prompting helps users get more precise outputs from generative AI tools by giving clear instructions. These prompts can be tailored for different needs ranging from condensing information to creating new content.

Summarizing Text

Text summarization is one of the most practical applications of AI prompting. Users can guide generative AI tools to condense long articles, reports, or documents into shorter versions while keeping the key points intact.

For effective summarization prompts, specificity is crucial. For example:

- “Summarize this research paper in 3 bullet points focusing on methodology and results”

- “Create a 100-word executive summary of this quarterly report”

ChatGPT and similar tools respond well to format instructions that specify the desired length, style, and focus areas. This helps professionals save time when reviewing large volumes of text for meetings or research.

Adding focus parameters such as “highlight only contradictory findings” or “focus on statistical data” narrows the summary to the information you actually need.

Data Analysis and Interpretation

AI prompting excels at helping users make sense of complex data sets. By crafting careful input prompts, analysts can extract insights, identify patterns, and interpret results more efficiently.

Effective data analysis prompts typically include:

Structure specifications:

- “Organize these survey results into demographic categories”

- “Compare these quarterly sales figures and identify top 3 trends”

Visualization requests:

- “Describe how this data would look in a scatter plot”

- “Explain which graph type would best represent this relationship”

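The AI analyzes patterns in the information provided and can suggest interpretations that a human analyst might miss, which is useful when looking for specific correlations. Those interpretations should still be checked against the data, since models can misread figures or report correlations that are not there, and very large datasets may not fit in a single prompt.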

Content Generation

Content creation is where generative AI truly shines. AI prompts for text generation can produce various types of content including blog posts, marketing copy, emails, and creative writing.

Effective content generation prompts should include:

- Target audience specification – “Write for small business owners with limited technical knowledge”

- Tone and style guidelines – “Use a professional but conversational tone”

- Format requirements – “Create a 5-point FAQ about cloud computing”

ChatGPT and similar models can generate first drafts that writers can then refine. This collaborative approach saves time while maintaining human creativity and oversight.

The quality of generated content directly correlates with prompt quality. Specific prompts that provide context, examples, and clear instructions consistently produce better results than vague requests.

Few-Shot Learning and Prompting

Few-shot prompting helps AI models complete tasks by showing them a few examples. This approach sits between zero-shot prompting, which gives instructions but no examples, and fine-tuning a model on a large training set. It is particularly effective for specialized tasks.

Understanding Few-Shot Learning

Few-shot learning refers to the ability of AI systems to understand new concepts from just a few examples. Unlike traditional machine learning that requires thousands of training examples, few-shot prompting enables large language models to learn from minimal demonstrations.

This technique works because modern LLMs have been pre-trained on vast amounts of data, giving them a foundation of knowledge. When you provide a few examples in your prompt, you’re not teaching the model new information—you’re showing it the specific pattern you want it to follow.

For instance, if you want a model to classify customer feedback, you might include:

- “Food was delicious!” → Positive

- “Waited 45 minutes for service” → Negative

The model then recognizes the pattern and can classify new statements accordingly.
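The feedback-classification example can be written as a reusable prompt plus a small parser that maps the model’s free-text reply back to an allowed label. A Python sketch; the helper names, the `->` separator, and the sample reply are illustrative assumptions.

```python
LABELS = ("Positive", "Negative")

EXAMPLES = [
    ("Food was delicious!", "Positive"),
    ("Waited 45 minutes for service", "Negative"),
]


def build_classification_prompt(feedback):
    """Instruction, labeled examples, then the new statement with its label left blank."""
    lines = ["Classify each piece of customer feedback as Positive or Negative.", ""]
    for text, label in EXAMPLES:
        lines.append(f'"{text}" -> {label}')
    lines.append(f'"{feedback}" ->')
    return "\n".join(lines)


def parse_label(reply):
    """Map the model's free-text reply onto one of the allowed labels, or None."""
    cleaned = reply.strip().lower()
    for label in LABELS:
        if cleaned.startswith(label.lower()):
            return label
    return None


prompt = build_classification_prompt("The staff were friendly and quick")
print(prompt)
print(parse_label(" positive.\n"))  # Positive
```

Returning `None` for unexpected replies (for example "Neutral") makes it easy to flag outputs that did not follow the pattern.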

Applying Few-Shot Techniques in Prompts

Creating effective few-shot prompts requires careful selection of examples. Your demonstrations should be:

- Clear and consistent in format and style

- Representative of the desired output

- Diverse enough to show variations

To implement few-shot prompting, follow this structure:

- Begin with a clear instruction

- Provide 2-5 high-quality examples

- End with the new input that needs processing

Few-shot techniques are particularly valuable when working with specialized terminology or unique formatting requirements. They help the model understand context-specific rules without extensive explanation.

When crafting examples, arrange them from simple to complex when possible. This progression helps the model build understanding gradually, improving performance on more difficult cases.
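The structure above (instruction, 2-5 examples, new input) and the simple-to-complex ordering can be enforced in code. A minimal Python sketch; `assemble_few_shot` is hypothetical, and sorting by input length is an assumed, rough stand-in for "simple to complex".

```python
def assemble_few_shot(instruction, examples, new_input, min_examples=2, max_examples=5):
    """Build instruction, numbered examples, then the new input left incomplete."""
    if not min_examples <= len(examples) <= max_examples:
        raise ValueError(
            f"expected {min_examples}-{max_examples} examples, got {len(examples)}"
        )
    # Shorter inputs first: a crude proxy for ordering from simple to complex.
    ordered = sorted(examples, key=lambda pair: len(pair[0]))
    lines = [instruction, ""]
    for number, (source, target) in enumerate(ordered, start=1):
        lines.append(f"{number}. {source} -> {target}")
    lines.append(f"{len(ordered) + 1}. {new_input} ->")
    return "\n".join(lines)


prompt = assemble_few_shot(
    "Convert these sentences to past tense:",
    [("I walk to the store", "I walked to the store"), ("She runs quickly", "She ran quickly")],
    "They eat lunch",
)
print(prompt)
```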

Challenges and Considerations in Prompt Engineering

Prompt engineering presents several important hurdles that AI practitioners must navigate to achieve optimal results. These challenges range from technical difficulties in formulating effective prompts to ethical questions about bias and representation.

Identifying Common Challenges

One major challenge in prompt engineering is interpretability and explainability. When prompts become complex, the resulting AI outputs can be difficult for users to understand. This creates a communication gap between what users expect and what the AI delivers.

Data quality issues also frequently arise. Generative AI models depend on large amounts of high-quality training data; models trained on insufficient or low-quality data may produce inaccurate or biased outcomes that even a well-crafted prompt cannot fully correct.

Prompt precision presents another significant hurdle. Finding the right level of specificity is often a balancing act. Too vague, and the AI might miss the intended goal. Too specific, and it may become overly constrained.

The Chain-of-Thought technique helps address reasoning challenges, but implementing it effectively requires skill and practice.

Ethical Considerations

Bias remains one of the most pressing concerns in prompt engineering. AI systems can amplify existing social biases if prompts aren’t carefully designed. Practitioners must actively work to identify and mitigate these biases through thoughtful prompt construction.

Generative AI raises important ethical questions about content authenticity and intellectual property. When prompts lead to content that mimics existing works, copyright and attribution issues may arise.

Privacy considerations cannot be overlooked. Engineers must be cautious about prompts that might elicit personally identifiable information or sensitive data from AI systems.

Transparency represents another crucial ethical dimension. Users should understand when they’re interacting with AI-generated content and the limitations of these systems.

Accountability structures need development as prompt engineering evolves. Clear guidelines about who bears responsibility for AI outputs will help address potential harms.

Best Practices for Prompt Engineers

Effective prompt engineering requires both technical skill and creative thinking. The best prompt engineers focus on clarity, context, and continuous adaptation to get optimal results from AI systems.

Continual Learning and Update of Knowledge

Prompt engineers must commit to ongoing learning about AI capabilities and limitations. New AI models are released frequently, each with different strengths and response patterns.

One of the best ways to improve AI prompts is to understand how large language models work. This knowledge helps engineers write focused, effective instructions instead of piling on unnecessary detail.

Testing is crucial for prompt refinement. Engineers should:

- Run multiple variations of prompts

- Document which approaches work best

- Build a personal library of effective patterns

They should also stay current with prompt engineering strategies like task decomposition and providing reference texts when necessary. Breaking complex requests into simpler subtasks often yields better results.
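A minimal Python sketch of that testing loop: run each variant, score the reply, and keep a sorted log. `fake_model` is a hypothetical stub standing in for a real model call, and the "exactly three bullets" score is just one example of a check you might document.

```python
def fake_model(prompt):
    """Hypothetical stand-in for a real model call; replace with your provider's client."""
    if "bullet" in prompt.lower():
        return "- point one\n- point two\n- point three"
    return "A single paragraph of text."


def count_bullets(reply):
    """Count lines that look like '- ' bullet points."""
    return sum(1 for line in reply.splitlines() if line.startswith("- "))


def compare_variants(variants, model, score):
    """Run every prompt variant, score each reply, and return results best-first."""
    results = []
    for name, prompt in variants.items():
        reply = model(prompt)
        results.append({"name": name, "prompt": prompt, "score": score(reply)})
    return sorted(results, key=lambda row: row["score"], reverse=True)


variants = {
    "plain": "Summarize this article.",
    "formatted": "Summarize this article in three bullet points.",
}
# Score: 1.0 if exactly three bullets were produced, else 0.0.
log = compare_variants(variants, fake_model, lambda reply: float(count_bullets(reply) == 3))
for row in log:
    print(row["name"], row["score"])
```

Saving each log (prompt text, reply, score) over time is one way to build the personal library of effective patterns described above.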

Adapting Prompts to Intended Audience

The intended audience significantly impacts how prompts should be structured. Prompt engineers must consider who will interact with the AI system and tailor instructions accordingly.

For technical users, prompts can include specialized vocabulary and complex instructions. For general audiences, engineers should provide detailed context and simpler guidance.

Effective strategies include:

- Adjusting complexity based on user expertise

- Using domain-specific examples relevant to the audience

- Considering cultural and linguistic factors

Prompt engineers should clearly define objectives for each audience type. A marketing team might need creative outputs, while data analysts require factual precision from the same AI system.

Testing prompts with representative users from the intended audience provides valuable feedback for refinement and optimization.

Frequently Asked Questions

Effective prompting lies at the heart of successful generative AI use. Understanding key techniques and challenges can dramatically improve results when working with these powerful systems.

What are the best practices for constructing prompts in generative AI?

Creating effective prompts requires clarity and specificity. Users should state their desired output format explicitly and provide necessary context for the AI to understand the request properly.

Breaking complex requests into steps often yields better results than a single complicated prompt. Framing a request as a sequence of smaller tasks gives the model one manageable piece at a time.

Including examples of desired outputs can help the AI understand expectations better. This technique, called few-shot prompting, gives the model reference points for style and content.

Can you provide examples of effective prompt templates for generative AI applications?

Role-based prompts help direct AI responses by assigning a specific persona. For instance: “Act as an expert historian and explain the causes of World War I in simple terms.”

Task-specific templates improve consistency. A basic structure might include: context + specific instruction + desired format + length requirements. Filling in the same slots each time helps the AI tool produce output in the shape you expect.
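Python’s standard-library `string.Template` is one lightweight way to store such a template and fill it in consistently. The placeholder names below are assumptions for illustration; `substitute` raises a `KeyError` if any placeholder is left unfilled, which catches incomplete prompts early.

```python
from string import Template

# Hypothetical reusable template: context + instruction + format + length.
TASK_TEMPLATE = Template(
    "Context: $context\n"
    "Task: $instruction\n"
    "Format: $output_format\n"
    "Length: $length"
)

prompt = TASK_TEMPLATE.substitute(
    context="I'm creating educational materials for 3rd graders learning about photosynthesis.",
    instruction="Write a simple explanation using analogies they would understand.",
    output_format="short paragraphs",
    length="about 150 words",
)
print(prompt)
```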

Content transformation prompts work well for reformatting information: “Convert this technical explanation into an FAQ suitable for beginners” or “Summarize this transcript into a list of key points.”

What role do foundation models play in responding to prompts within generative AI?

Foundation models are the large pretrained models underlying generative AI systems. They determine how well the system understands and responds to various prompting techniques.

Different foundation models have distinct strengths in processing certain types of prompts. Some excel at creative writing while others perform better with factual or technical content.

These large models contain embedded knowledge that can be accessed through careful prompting. Their training data influences how they interpret ambiguous requests.

How can one optimize prompts to improve the quality of outputs from a generative AI?

Iterative refinement helps optimize prompts substantially. Users should view prompting as a conversation, adjusting instructions based on initial outputs to get closer to desired results.

A simple 5-step method built around asking good questions can turn confusing AI output into clear results. This approach focuses on progressive improvement rather than expecting perfect results immediately.

Adding constraints helps control output quality. Specifying tone, length, audience level, and format provides the AI with clearer parameters for generating appropriate content.

In what ways can the context of a prompt affect the performance of a generative AI model?

Context length significantly impacts model comprehension. Providing sufficient background information helps the AI understand the subject matter properly, but excessive detail can dilute focus.

Cultural and domain-specific context affects interpretation. The same prompt may yield different results depending on whether the AI recognizes specialized terminology or cultural references.

Sequential prompting allows building contextual understanding across multiple interactions. Earlier prompts create a conversational framework that influences how later instructions are interpreted.
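Many chat interfaces represent a conversation as a list of messages, each with a role (such as system, user, or assistant) and content. The sketch below keeps that history as plain Python data without calling any API; `trim_history` is hypothetical, and trimming by message count is a simplification, since real context limits are measured in tokens.

```python
def trim_history(messages, max_turns):
    """Keep every system message plus the most recent `max_turns` other messages."""
    system = [m for m in messages if m["role"] == "system"]
    others = [m for m in messages if m["role"] != "system"]
    # Guard against max_turns == 0, where others[-0:] would return the whole list.
    recent = others[-max_turns:] if max_turns > 0 else []
    return system + recent


history = [{"role": "system", "content": "You are a concise travel planner."}]
history.append({"role": "user", "content": "Plan a 5-day trip to Tokyo."})
history.append({"role": "assistant", "content": "Day 1: Asakusa and Ueno..."})
history.append({"role": "user", "content": "Make day 2 focus on food."})

# Each new request is sent together with earlier turns, so "day 2" is
# interpreted relative to the itinerary the model already produced.
window = trim_history(history, max_turns=2)
for message in window:
    print(message["role"], "|", message["content"])
```

Dropping the oldest turns keeps the prompt within budget, but anything trimmed away no longer shapes how later instructions are interpreted.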

What are the limitations and challenges of prompt-based generation in AI systems?

Prompt sensitivity can lead to inconsistent outputs. Minor wording changes sometimes produce dramatically different results, making reliability challenging for critical applications.

Generative AI may produce plausible-sounding but factually incorrect information. This requires human verification, especially for factual or technical content.

Complex reasoning tasks often require sophisticated prompt engineering. Abstract concepts, multi-step logic, and nuanced understanding remain difficult even with effective AI prompts and careful guidance.