In a bold move that could reshape the digital landscape, OpenAI is reportedly developing its own social networking platform — a project that blends cutting-edge AI tools with the social media experience. Currently in its early prototype stage, this initiative signals OpenAI’s ambition to carve out a space in a domain long dominated by companies like Meta and Elon Musk’s X (formerly Twitter).

A New Kind of Social Network

Unlike traditional platforms that rely heavily on user-generated content and algorithmic feeds, OpenAI’s forthcoming social network appears designed from the ground up to integrate artificial intelligence at its core. Users will reportedly interact not just with each other but also with AI tools like ChatGPT and DALL·E to help generate text, images, and potentially entire posts.

This AI-powered content creation engine could enable a new kind of feed — one that’s not just social, but also deeply creative, interactive, and personalized. Think of a platform where instead of typing a status update or uploading a selfie, users could ask ChatGPT to co-author a story, create an image based on a mood, or remix an existing idea into something fresh.

Why Is OpenAI Doing This?

There are several layers to this move:

- Data and Feedback Loop: A social network naturally encourages constant interaction. This generates vast amounts of high-quality data — gold for training and refining AI models.

- Product Stickiness: A dedicated platform gives OpenAI a way to build ongoing engagement beyond standalone tools like ChatGPT. It keeps users inside the ecosystem longer, offering both entertainment and utility.

- Strategic Differentiation: While X focuses on real-time news and Meta pushes toward the metaverse, OpenAI is poised to offer a creative playground — a space where AI helps users express, imagine, and build ideas in real time.

Some insiders view this as a hedge against becoming just a backend provider for other platforms. By owning its own distribution layer, OpenAI gains control over how its technology is used and monetized.

Competing With the Giants

The timing is notable. X is battling declining trust, Meta is doubling down on Threads and AI-generated content, and TikTok’s algorithmic dominance has shifted the industry toward recommendation-first experiences. OpenAI, by contrast, could pioneer a hybrid model — one that blends creation, collaboration, and community, all infused with AI.

The concept could appeal to digital natives who are as comfortable interacting with bots as they are with other people. If done well, it might even set a new standard for what social platforms look like in the AI era.

What We Don’t Know Yet

While buzz is growing, many questions remain unanswered:

- What will the platform look like?

- Will it be text-based, image-focused, or something entirely new?

- Will it involve identity verification, or lean into anonymous creativity?

- How will moderation be handled, especially in a world of AI-generated content?

No release date has been announced, and OpenAI has not formally confirmed the project — though multiple sources say internal development is underway and being led by a specialized team.

The Bigger Picture

This project reflects a broader trend: the blending of AI with consumer-facing platforms. From Snapchat filters to AI-powered writing assistants, users are already engaging with AI in subtle ways. OpenAI’s social network could be the first to make those interactions central — not just enhancements, but the main event.

If successful, it would mark a significant step in OpenAI’s evolution from a research lab to a full-fledged tech platform with consumer ambitions. And in doing so, it may not just compete with the Twitters and Instagrams of the world — it might redefine what a social network can be.

How to Spot AI Images

AI-generated images are everywhere online these days. From social media profiles to news articles, artificial images have become harder to spot as the technology improves. You might have already seen countless AI images without even realizing it. The key to identifying AI-generated images is to look for specific details like unnatural hands with extra fingers, strange text, odd lighting, perfect symmetry, and background inconsistencies that still challenge even the most advanced AI tools.

As AI technology advances, telling the difference between real photographs and artificially generated images becomes more difficult each day. Many AI-created pictures now appear incredibly realistic at first glance. However, these images often contain subtle flaws that can help you determine if what you’re seeing is authentic or created by a computer program.

Learning to spot AI images isn’t just about satisfying curiosity—it’s becoming an essential skill in our digital world. With the rise of deepfakes and misleading content, being able to identify AI-generated images helps you avoid being fooled by fake content and make better judgments about what you see online.

Several telltale signs and verification methods can still help you identify AI-generated images:

I. Visual Clues & Inconsistencies:

- Hands and Limbs: This is often one of the biggest giveaways. AI frequently struggles with generating realistic hands, leading to:

  - Extra or missing fingers/limbs.

  - Fingers that are unnaturally long, short, fused, or distorted.

  - Limbs that connect oddly to the body or have strange angles.

  - This is especially apparent in images with multiple people or those in the background.

- Faces and Features: While AI is getting better at faces, look for:

  - Uncanny Valley Effect: Faces that look almost real but have a subtly “off” or “soulless” quality.

  - Symmetry Issues: While human faces aren’t perfectly symmetrical, AI can sometimes create an unnatural, overly symmetrical appearance or, conversely, highly distorted asymmetry.

  - Eyes: Eyes might be overly shiny, blurry, hollow-looking, or have inconsistent reflections.

  - Teeth: Teeth can appear too uniform, misaligned, or have an unnatural number or shape.

  - Skin Texture: Skin can look overly smooth, waxy, or plasticky, lacking natural pores, blemishes, or fine lines.

- Hair:

  - Inconsistent texture (some parts sharp, some blurry).

  - Hair that seems to blend into the background or other elements.

  - Unnaturally wispy or blurry edges.

- Text and Symbols: AI models often struggle with generating coherent and accurate text. Look for:

  - Garbled, nonsensical, or misspelled words.

  - Letters mashed together or with too much space.

  - Unfamiliar characters or symbols.

  - Misspellings on signs, clothing, or objects.

- Backgrounds and Objects:

  - Distortions and Anomalies: Warped backgrounds, illogical perspectives, or strange objects.

  - Repeating Patterns: AI can sometimes generate repetitive patterns in textures or backgrounds that don’t look natural.

  - Inconsistent Lighting and Shadows: Shadows might fall in unnatural directions, or lighting on different elements within the image might not match.

  - Functional Implausibilities: Objects or their interaction with people might be illogical (e.g., a person’s hand inside a hamburger, a tennis racket with slack strings).

  - Lack of Context: AI models sometimes lack real-world understanding, leading to objects or situations that don’t make sense culturally or physically.

- Overall “Glossy” or “Rendered” Look: Many AI images have an unnaturally clean, polished, or overly “perfect” aesthetic that can feel a bit too much like a computer rendering rather than a photograph.

II. Technical & Verification Methods:

- Reverse Image Search: Upload the image to a reverse image search engine (like Google Images or TinEye). This can help you:

  - Trace the image’s origin. If it only appears on social media or obscure sites without reputable sources, be cautious.

  - See if the image has been used in other contexts or if similar images exist.

- Metadata Analysis: If possible, check the image’s metadata (information embedded in the file, like camera settings, date, and sometimes editing software). While AI tools can strip metadata, its absence or unusual entries can sometimes be a clue.

- AI Image Detection Tools: Several tools are designed to analyze images and estimate the likelihood of them being AI-generated. Some popular ones include:

  - AI or Not

  - Hugging Face AI Detector

  - Illuminarty

  - FotoForensics (uses Error Level Analysis, or ELA)

  - V7 Deepfake Detector

  - Undetectable AI Image Detector

  - Note: While these tools are improving, none are 100% foolproof, and their accuracy can vary.

- Zoom In and Inspect Details: Often, the subtle imperfections of AI generation become more apparent when you zoom in on areas that might seem insignificant at first glance.

- Consider the Source and Context:

  - Where did you find the image? Is the source reputable?

  - Does the image make extraordinary claims? If something seems too good or too shocking to be true, it might be.

  - Does the image align with known facts or cultural norms? As AI-generated content becomes more prevalent, source verification becomes even more crucial.

As AI technology continues to evolve, the ability to spot AI-generated images will become more challenging. A combination of careful visual inspection and the use of available tools and critical thinking is your best defense.

Key Takeaways

- Look for telltale signs like distorted hands, unrealistic textures, and background inconsistencies to identify AI-generated images.

- AI image detection skills are increasingly important as generative AI technology becomes more sophisticated and widespread.

- Regular practice and staying updated on the latest AI image generation techniques will improve your ability to distinguish between real and artificial content.

Understanding AI-Generated Images

AI art has evolved rapidly, creating images that can sometimes fool even careful observers. Looking at how these tools work helps us spot the differences between AI creations and authentic photographs.

Evolution of Image Generators

Early AI image generators produced obviously fake pictures with strange distortions. Today’s advanced tools like DALL-E and Midjourney create remarkably realistic images that can be hard to distinguish from photographs.

These modern systems work by learning patterns from millions of real images. When you request “a cat wearing a space helmet,” the AI combines features it has learned about cats and space helmets.

Each generation of AI improves significantly. The jump in quality from 2021 to 2023 was dramatic. Early limitations like strange hands with extra fingers are becoming less common, though still present in many AI creations.

Distinguishing AI Art from Real Photographs

AI-generated images often have telltale signs that reveal their digital origin. Look for unnaturally smooth or glossy skin textures that lack natural imperfections.

Pay attention to hands and eyes. AI frequently creates hands with the wrong number of fingers or oddly shaped digits. Eyes in AI images often appear too symmetrical, or show reflections that do not match between the two eyes.

Backgrounds can provide clues too. AI images might show illogical architectural elements or physics-defying situations that wouldn’t exist in real photographs.

Text in AI images is usually garbled or nonsensical. When words appear on signs or clothing in AI art, they rarely form coherent sentences or readable words.

Technical Aspects of AI Art Generation

Understanding the underlying technology behind AI-generated images helps you identify them more effectively. AI systems use complex algorithms that leave distinct traces in their creations despite impressive capabilities.

How Generative AI Works

Many current image generators create images through a process called diffusion. Stable Diffusion and recent versions of DALL-E are built on diffusion models, and Midjourney is widely believed to work in a similar way. These models learn patterns from millions of images during training, then use that knowledge to create new visuals.

When you input a text prompt, the AI starts with random noise and gradually refines it into an image matching your description. This process involves:

- Neural networks that recognize and reproduce visual patterns

- Latent space mapping where the AI organizes visual concepts

- Diffusion models that transform noise into coherent images

The AI doesn’t truly “understand” what it’s creating. Instead, it produces statistical approximations of what it’s seen in training data. This limitation explains why AI often struggles with complex concepts like human hands or text.
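The noise-to-image refinement described above can be illustrated with a toy sketch. This is not a real diffusion model: a real system uses a trained neural network to estimate what to remove at each step, whereas the hypothetical `refine_from_noise` below cheats by already knowing the target and simply nudging a list of random numbers toward it. The step count, the 0.2 nudge factor, and the noise schedule are illustrative assumptions.

```python
import random


def refine_from_noise(target, steps=50, seed=0):
    """Toy illustration: start from random noise and refine it step by step.

    `target` stands in for "the image that matches the prompt". A real
    diffusion model never sees the target; it uses a learned denoiser instead.
    """
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in target]  # pure noise to begin with
    history = [list(x)]
    for t in range(steps, 0, -1):
        # Assumed schedule: inject less fresh noise as we approach the last step.
        noise_scale = 0.1 * (t - 1) / steps
        x = [
            xi + 0.2 * (ti - xi) + rng.gauss(0.0, noise_scale)
            for xi, ti in zip(x, target)
        ]
        history.append(list(x))
    return history


def mean_abs_error(a, b):
    """Average absolute difference between two equal-length lists."""
    return sum(abs(ai - bi) for ai, bi in zip(a, b)) / len(a)


if __name__ == "__main__":
    target = [0.0, 0.5, 1.0, 0.5]  # a four-"pixel" stand-in for an image
    history = refine_from_noise(target)
    print("error at start:", mean_abs_error(history[0], target))
    print("error at end:  ", mean_abs_error(history[-1], target))
```

The point of the sketch is only the shape of the loop: many small corrections, each applied to a slightly less noisy input, rather than a single jump from prompt to picture.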

Common Characteristics of AI-Generated Images

AI-generated artwork typically displays telltale signs that differentiate it from human-created art. These markers become easier to spot once you know what to look for.

Texture inconsistencies often appear in AI images. You might notice odd patterns in skin, fabric, or background elements that don’t follow physical rules.

Structural errors are common in AI art. Look for:

- Misaligned architectural features

- Anatomical impossibilities (extra fingers, misshapen ears)

- Objects that merge or blend incorrectly

Text and faces reveal AI limitations clearly. AI struggles to produce consistent lettering or coherent words, often creating gibberish text. Faces may look realistic at first glance but contain subtle asymmetries or unnatural feature combinations.

Details in transitions between objects often show problems. AI has difficulty maintaining logical consistency where elements meet or overlap.

Detecting AI-Generated Images

As AI technology advances, spotting computer-generated images has become increasingly challenging. The telltale signs are often subtle but recognizable once you know what to look for.

Tools and Techniques for Detection

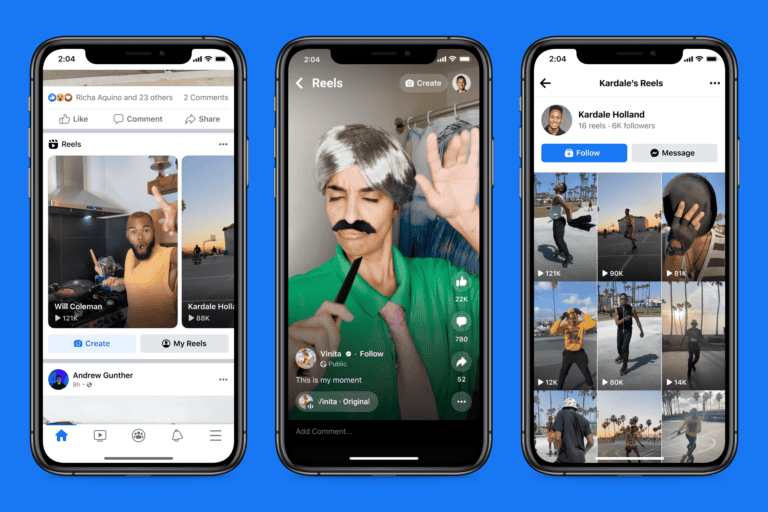

Several specialized tools can help you identify AI-generated images. AI or Not is a simple drag-and-drop tool that analyzes an image and estimates whether it was created by AI. Meta has also begun labeling AI-generated content on Facebook and Instagram to improve transparency.

When examining images yourself, focus on these key areas:

- Hands and fingers: AI often creates hands with too many or too few fingers

- Text elements: Look for garbled or nonsensical text within images

- Hair details: AI struggles with realistic hair strands and textures

- Facial asymmetry: Real faces are naturally asymmetrical, while AI tends to create overly symmetrical features

You can also look for inconsistent lighting and shadows, which AI systems frequently struggle to render realistically.
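The symmetry check in the list above can be turned into a rough number. The sketch below assumes the face has already been cropped, centered, and converted to a grid of grayscale values from 0 to 255; the function name `mirror_symmetry` and that input format are assumptions made for illustration, and a real pipeline would need face alignment first.

```python
def mirror_symmetry(gray):
    """Score left-right symmetry of a grayscale grid (rows of 0-255 ints).

    Returns 1.0 when the left half mirrors the right half exactly and
    0.0 when every mirrored pair differs by the full 0-255 range.
    """
    total = 0
    count = 0
    for row in gray:
        width = len(row)
        for x in range(width // 2):
            # Compare each pixel with its mirror partner across the center line.
            total += abs(row[x] - row[width - 1 - x])
            count += 1
    if count == 0:
        raise ValueError("image must be at least two pixels wide")
    return 1.0 - total / (count * 255)


if __name__ == "__main__":
    mirrored = [[10, 20, 20, 10], [0, 5, 5, 0]]
    lopsided = [[10, 20, 90, 200], [0, 5, 60, 120]]
    print(mirror_symmetry(mirrored))  # perfectly mirrored rows
    print(mirror_symmetry(lopsided))  # noticeably lower
```

A score very close to 1.0 on a supposedly candid portrait is only a prompt to look closer; lighting, pose, and retouching all affect the value.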

Role of Reverse Image Search

Reverse image search engines like Google Images and TinEye can help determine if an image is AI-generated. Upload the suspicious image to these services to see if it appears elsewhere online or if similar AI-generated images appear in the results.

This technique works especially well for:

- Finding the original version of a manipulated image

- Identifying stock images that may have been altered

- Discovering if an image has been used in multiple contexts

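If a reverse search yields no results but the image looks professional, this could indicate it was AI-generated rather than photographed. Many AI images lack the digital footprint that real photographs shared online tend to build up. A brand-new photograph can also return nothing, however, so treat an empty result as a weak signal rather than proof.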
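Search engines do not publish exactly how they match images, but one commonly described building block for finding near-duplicates is a perceptual hash. The sketch below shows an "average hash" under the assumption that the image has already been shrunk to a small grayscale grid; `average_hash` and `hamming_distance` are illustrative names, not the API of Google Images or TinEye.

```python
def average_hash(gray):
    """Hash a small grayscale grid: one bit per pixel, set when the
    pixel is brighter than the mean of the whole grid."""
    pixels = [p for row in gray for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming_distance(a, b):
    """Number of bit positions in which two hashes differ."""
    return bin(a ^ b).count("1")


if __name__ == "__main__":
    original = [[10, 200], [220, 30]]
    brightened = [[20, 210], [230, 40]]  # same picture, slightly lighter
    inverted = [[245, 55], [35, 225]]    # very different picture
    h = average_hash(original)
    print(hamming_distance(h, average_hash(brightened)))  # small distance
    print(hamming_distance(h, average_hash(inverted)))    # large distance
```

Because the hash depends on relative brightness rather than exact pixel values, a recompressed or lightly edited copy tends to land close to the original, which is what lets a search surface earlier versions of a picture.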

Understanding Deepfakes

Deepfakes represent a sophisticated form of AI-generated imagery that specifically mimics real people. These convincing fakes are created using neural networks that analyze existing videos and photos of a person to generate new, fabricated content.

Warning signs of deepfakes include:

- Unnatural blinking patterns or few blinks

- Skin texture inconsistencies between face and body

- Audio-visual misalignment when lips don’t match speech

- Strange head positions or movements

Some observers report that synthetic female faces skew toward a stylized look, with short, round faces, big eyes, and plump lips. You can also look for the “digital makeup” effect, where facial features seem unnaturally perfect or glossy.

Implications of AI-Generated Content

AI-generated images raise serious concerns about truth and trust in digital media. The technology’s rapid advancement has made it increasingly difficult to distinguish between real and fake visual content.

AI in the Spread of Misinformation

AI tools can create convincing fake images that look authentic to the untrained eye. These images can be used to spread false narratives and manipulate public opinion. When AI-generated content appears credible, it can quickly go viral on social media platforms.

You should be especially cautious during election periods or crisis events when misinformation tends to spike. Bad actors may use AI images to create false evidence of events that never occurred or to manipulate contexts.

Organizations and individuals with malicious intent can create and distribute convincing fake images at unprecedented scale and speed. This makes fact-checking more challenging than ever before.

Learning to spot AI images helps you become part of the solution rather than unknowingly sharing misinformation with others.

Risks Associated with Deepfakes

Deepfakes represent a particularly dangerous form of AI-generated content. These are sophisticated fake videos or images where a person’s likeness is replaced with someone else’s, creating a false impression that someone did or said something they never did.

The potential for identity theft and fraud increases dramatically with deepfakes. Scammers can create convincing videos of public figures or even your friends and family to request money or personal information.

Corporate reputation and personal privacy face significant threats. Deepfakes can be used to:

- Impersonate CEOs giving false financial directives

- Create fake celebrity endorsements

- Generate compromising images of individuals

The psychological impact shouldn’t be underestimated. When you can’t trust what you see, it creates a general sense of skepticism that can undermine legitimate information.

Developing critical visual literacy has become essential in navigating this new reality of sophisticated digital deception.

Ethical Considerations and Best Practices

Using AI-generated images raises important ethical questions about authenticity and potential misuse. Understanding these considerations helps you navigate this technology responsibly while avoiding harmful practices.

Promoting Authenticity in AI Usage

When working with AI-generated images, always be transparent about their origin. Clearly label AI-created content to avoid misleading your audience. This builds trust and maintains integrity in your work.

Consider the ownership implications of the images you create. Many AI tools have specific terms regarding who owns the output and how it can be used commercially.

Be mindful of potential biases in your AI-generated images. Review all content for stereotypes or problematic representations before publishing or sharing.

Think about attribution. If your AI tool was trained on artists’ work, consider how you might acknowledge their influence or contribution to the final product.

Best practice tip: Create a standard disclaimer or watermark system for all your AI-generated content to maintain transparency.
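One lightweight way to approach the disclaimer idea is a small "sidecar" label stored next to each image. The sketch below is a hypothetical format invented for illustration: the field names and the `build_ai_label` function are assumptions, not an industry standard, and a label like this is easy to separate from the image.

```python
import hashlib
import json


def build_ai_label(image_bytes, tool_name, prompt=None):
    """Return a JSON string declaring that an image is AI-generated.

    The SHA-256 digest ties the label to one exact file, so a label
    copied next to a different image will not match it.
    """
    label = {
        "ai_generated": True,
        "generator": tool_name,
        "sha256": hashlib.sha256(image_bytes).hexdigest(),
    }
    if prompt is not None:
        label["prompt"] = prompt
    return json.dumps(label, indent=2, sort_keys=True)


if __name__ == "__main__":
    fake_image = b"not a real image, just example bytes"
    print(build_ai_label(fake_image, "example-generator", prompt="a cat wearing a space helmet"))
```

In practice you would write the returned text to a file such as `picture.png.label.json` alongside the image, and pair it with a visible caption or watermark for viewers who never see the sidecar.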

Preventing Misuse of AI Tools

Establish clear boundaries for your AI image use. Avoid creating content that could spread misinformation or distort reality in harmful ways, such as deepfakes of real people without consent.

Implement strong data protection measures when using AI image generators. Many tools require uploads or access to information that should be handled securely.

Be aware of copyright concerns. Don’t use AI to recreate protected content or bypass licensing requirements for commercial projects.

Monitor how others might use your AI-generated content. Consider watermarking or other tracking methods to prevent your images from being repurposed for misleading content.

Key safeguards to implement:

- Regular ethics reviews of your AI image workflows

- Clear policies about acceptable AI image subjects and uses

- Ongoing education about emerging ethical concerns in AI technology

Frequently Asked Questions

AI images have become increasingly common online, but they often contain subtle signs that help identify them. Learning to spot these clues can help you distinguish between real and artificial content.

What are the common characteristics of images generated by AI?

AI-generated images often have a distinctive “too perfect” quality. They typically show unnaturally smooth textures and surfaces with a glossy appearance.

Many AI images have inconsistent lighting or shadows that don’t match the scene’s light source. This creates an unrealistic look that a real photograph would not show, because physical light and shadow stay consistent within a scene.

Background elements may appear distorted or misshaped in AI images. Objects that should be familiar often contain subtle errors in their proportions or details.

How can one differentiate between AI-generated images and photographs taken by humans?

Look for unusual or inconsistent details in the image. AI often struggles to render fine detail correctly, such as text on clothing or paired features such as eyes.

Check for natural facial expressions and body movements. AI-generated people typically lack the subtle micro-expressions and natural postures that real humans display in photographs.

Examine the metadata and EXIF data of the image when possible. AI-generated images often lack camera details or contain unusual entries that wouldn’t appear in genuine photographs.
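As a rough illustration of the metadata check, the sketch below scans the header of a JPEG file for an Exif block using only the Python standard library. It is a simplified reader written for this example (the function name `jpeg_has_exif` is an assumption), it only reports whether Exif data is present, and it ignores rarer marker layouts. Keep in mind that a missing Exif block proves little on its own, since metadata is often stripped when images are re-saved or shared.

```python
import struct


def jpeg_has_exif(data):
    """Return True if JPEG bytes contain an APP1 segment holding Exif data."""
    if data[:2] != b"\xff\xd8":  # every JPEG starts with the SOI marker
        raise ValueError("not a JPEG stream")
    pos = 2
    while pos + 4 <= len(data):
        if data[pos] != 0xFF:
            break  # unexpected byte: stop rather than guess
        marker = data[pos + 1]
        if marker == 0xDA:
            break  # start of scan: compressed pixel data follows
        # Segment length is big-endian and includes its own two bytes.
        (length,) = struct.unpack(">H", data[pos + 2:pos + 4])
        if length < 2:
            break  # malformed length field
        payload = data[pos + 4:pos + 2 + length]
        if marker == 0xE1 and payload.startswith(b"Exif\x00\x00"):
            return True
        pos += 2 + length
    return False


# Example use (the path is hypothetical):
# with open("photo.jpg", "rb") as f:
#     print(jpeg_has_exif(f.read()))
```

To read the actual camera model, timestamp, or software fields, you would use a dedicated metadata tool or library rather than extending this scanner.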

What techniques do AI image detection tools use to identify synthetic content?

Detection tools analyze pixel patterns and inconsistencies that human eyes might miss. These tools look for statistical anomalies in the image data that are typical of AI generation methods.

Many detection systems examine the noise pattern of an image. AI-generated images often have different noise signatures compared to photographs captured by real cameras.

Advanced detection tools check for features like facial symmetry issues, unnatural skin textures, and irregular background elements that commonly appear in AI-created images.
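To make the idea of a noise signature more concrete, the sketch below computes one very simple statistic: how much each pixel differs from the average of its four neighbours. This is a toy measure chosen for illustration, not the method any particular detection tool uses; real detectors rely on trained models and far richer features. The input is assumed to be a grid of grayscale values, and `noise_residual_variance` is a name invented for this example.

```python
def noise_residual_variance(gray):
    """Variance of (pixel minus mean of its 4 neighbours) over interior pixels.

    Smooth regions give values near zero; grainy regions give larger values.
    """
    residuals = []
    for y in range(1, len(gray) - 1):
        for x in range(1, len(gray[0]) - 1):
            neighbours = (
                gray[y - 1][x] + gray[y + 1][x] + gray[y][x - 1] + gray[y][x + 1]
            ) / 4
            residuals.append(gray[y][x] - neighbours)
    if not residuals:
        raise ValueError("need at least a 3x3 image")
    mean = sum(residuals) / len(residuals)
    return sum((r - mean) ** 2 for r in residuals) / len(residuals)


if __name__ == "__main__":
    smooth = [[10 * x for x in range(5)] for _ in range(5)]  # clean gradient
    grainy = [[100 + ((2 * x + 3 * y) % 5) for x in range(5)] for y in range(5)]
    print(noise_residual_variance(smooth))  # 0.0 for a perfect gradient
    print(noise_residual_variance(grainy))  # greater than zero
```

A single number like this cannot separate real from synthetic images by itself; it only shows the kind of low-level signal that statistical detectors examine across many images.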

What are the typical artifacts or flaws to look for in an image that may indicate it was produced by an AI?

Watch for issues with hands and fingers, which AI often renders incorrectly. Count the fingers—AI frequently creates hands with too many or too few digits.

Look for distortions in reflections and transparent surfaces. AI systems typically struggle to create accurate reflections in mirrors, water, or glass.

Check for errors in fine details like jewelry, text on clothing, or patterns on fabrics. These small elements often reveal AI’s limitations in rendering complex or small-scale features.

Are there any reliable methods for detecting AI alterations in digital media?

Verification tools designed specifically for detecting AI imagery are increasingly available online. These specialized services compare suspicious images against known AI generation patterns.

Cross-reference suspicious images through reverse image searches. This helps you find the original image if an AI has altered an existing photograph rather than created one from scratch.

Consulting multiple detection methods improves accuracy. No single technique is perfect, so using a combination of tools and manual inspection gives you the best chance of identifying AI content.
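One simple way to combine several tools is to average their scores and treat strong disagreement as a reason to withhold judgment. The sketch below assumes each detector reports a probability between 0 and 1 that the image is AI-generated; the detector names, thresholds, and the `combine_detector_scores` function are all hypothetical.

```python
from statistics import mean, pstdev


def combine_detector_scores(scores, flag_threshold=0.5, disagreement_threshold=0.25):
    """Combine per-detector probabilities into one cautious verdict.

    `scores` maps a detector name to its estimated probability (0 to 1)
    that the image is AI-generated.
    """
    if not scores:
        raise ValueError("at least one detector score is required")
    values = list(scores.values())
    average = mean(values)
    spread = pstdev(values)  # how much the detectors disagree
    if spread > disagreement_threshold:
        verdict = "inconclusive"
    elif average >= flag_threshold:
        verdict = "likely AI-generated"
    else:
        verdict = "likely authentic"
    return {"average": average, "spread": spread, "verdict": verdict}


if __name__ == "__main__":
    print(combine_detector_scores({"tool_a": 0.9, "tool_b": 0.8, "tool_c": 0.85}))
    print(combine_detector_scores({"tool_a": 0.95, "tool_b": 0.05}))
```

An "inconclusive" result is the cue to fall back on manual inspection and source checking instead of trusting whichever tool gave the answer you expected.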

Can the realism of AI-generated images be a reliable indicator of their authenticity?

Not on its own. Extreme perfection in an image should raise suspicion: real photographs contain natural imperfections, while AI images often appear unnaturally flawless.

Backgrounds in AI images frequently lack the natural depth and detail found in real photographs. Pay attention to how well the background elements align with the foreground subjects.

Even highly realistic AI images typically contain subtle inconsistencies somewhere. The more elements in the image, the more likely the AI will make mistakes in creating a physically accurate scene.