AI video generation has rapidly evolved from short, surreal clips to cinematic, story-driven content. Among the leaders in this space is Google DeepMind’s Veo 3, the third generation of the Veo series, announced in May 2025. Positioned as a high-end, production-capable video model, Veo 3 competes directly with models like OpenAI’s Sora, Runway’s Gen-3, and Pika.

In this article, we’ll explore what Veo 3 offers, how it compares to Sora, and where each model stands in the evolving AI video landscape.

What Is Veo 3?

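Veo 3 is Google DeepMind’s advanced text-to-video generation model, and the first in the series to generate synchronized audio (dialogue, sound effects, and ambient sound) alongside the video. It builds on earlier versions of Veo, improving: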

- Visual realism

- Prompt accuracy

- Scene consistency

- Camera motion control

- Narrative coherence over longer sequences

Veo 3 is designed for high-resolution, cinematic-quality video generation, targeting filmmakers, advertisers, content creators, and studios.

Key Capabilities

- High-definition video output (up to 1080p, depending on the platform)

- Longer clip durations than early-generation models (individual generations run about 8 seconds)

- Advanced physics and motion realism

- Improved character and object consistency

- Detailed camera control (pan, tilt, zoom, tracking shots)

- Style transfer and cinematic control (lighting, lens types, mood)

Veo 3 emphasizes professional-grade video realism and storytelling.

What Is Sora?

Sora, developed by OpenAI, is one of the most widely recognized AI video models. It gained attention in early 2024, when OpenAI previewed highly realistic clips up to a minute long with strong scene continuity and plausible physics.

Sora’s strengths include:

- Strong understanding of complex prompts

- Realistic motion and environmental physics

- Consistent characters across scenes

- Impressive world modeling capabilities

- Creative scene composition

Sora focuses heavily on world simulation, making its outputs feel physically grounded and narratively coherent.

Veo 3 vs. Sora: Head-to-Head Comparison

1. Video Realism

Veo 3

- Highly cinematic

- Strong lighting and lens simulation

- Designed for polished, production-ready visuals

Sora

- Extremely strong environmental realism

- Natural motion and physics modeling

- Highly believable dynamic scenes

Verdict: Both models are top-tier in realism. Sora often stands out in dynamic world simulation, while Veo 3 emphasizes cinematic polish and controllability.

2. Prompt Understanding

Veo 3

- Strong at interpreting filmmaking-style prompts

- Good at structured scenes and stylistic requests

Sora

- Excels at complex, layered prompts

- Handles abstract and imaginative instructions well

Verdict: Sora may have a slight edge in handling highly complex narrative prompts, while Veo 3 performs exceptionally well in structured, cinematic scenarios.

3. Clip Length & Narrative Coherence

Both models improved significantly over early AI video tools.

- Veo 3 supports longer, coherent scenes with better character continuity.

- Sora was designed with long-form video in mind and handles multi-scene storytelling effectively.

Verdict: Comparable, though Sora initially set the benchmark for longer coherent generation.

4. Control & Customization

Veo 3 Strengths:

- Camera motion controls

- Style-specific generation

- Cinematic direction (shot types, mood)

- Integration with Google’s creative and cloud tools

Sora Strengths:

- Strong narrative scene construction

- World consistency

- Natural object interactions

Verdict: Veo 3 leans more toward filmmaker-style control. Sora leans toward world simulation and emergent realism.

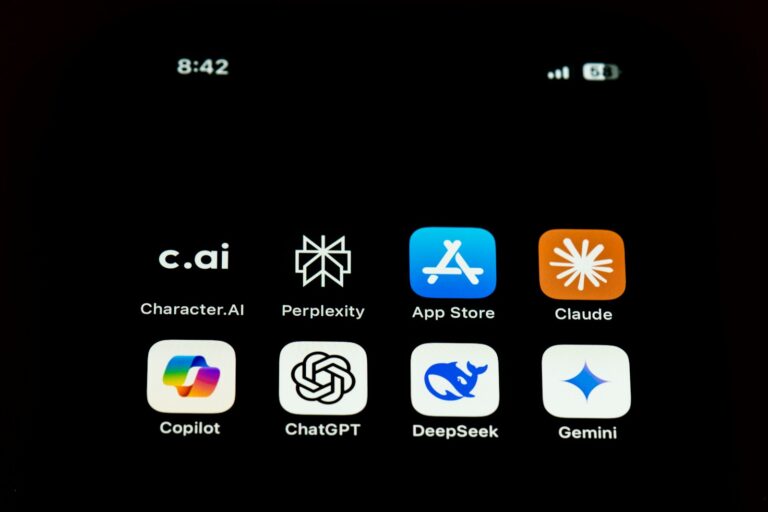

5. Ecosystem & Access

Veo 3

- Available through the Gemini app, the Flow filmmaking tool, and Vertex AI on Google Cloud

- Accessible to developers through the Gemini API

- Offered to both paid consumer subscribers and enterprise customers

Sora

- Developed by OpenAI

- Offered to ChatGPT subscribers through the standalone Sora app

- Strong developer and API ecosystem

Verdict: Access paths differ. Sora benefits from OpenAI’s broad developer reach, while Veo 3 is woven into Google’s AI and creative platforms.

How Veo 3 Compares to Other Competitors

Beyond Sora, other notable players include:

Runway Gen-3

- Popular among creators

- Strong editing and inpainting features

- Real-time workflow tools

Pika

- User-friendly

- Focused on social content creators

- Quick generation cycles

Compared to these:

- Veo 3 and Sora target a higher level of realism and physical simulation.

- Runway and Pika often prioritize accessibility and speed.

- Veo 3 and Sora aim more at cinematic or enterprise-grade output.

Strengths and Weaknesses

Veo 3 Strengths

- Cinematic control

- High visual fidelity

- Strong camera direction capabilities

- Enterprise and studio appeal

Veo 3 Limitations

- May require more structured prompting

- Access has depended on paid plans and a staged regional rollout

Sora Strengths

- Advanced world modeling

- Realistic physics and motion

- Strong narrative coherence

- Broad creative flexibility

Sora Limitations

- Access has been restricted by subscription tier and region

- Computationally intensive for long scenes

Which One Is Better?

It depends on your use case.

- 🎬 Filmmakers and advertisers may prefer Veo 3 for cinematic control.

- 🌍 Story-driven creators and world-builders may favor Sora.

- 🛠️ Developers and startups may choose based on API access and ecosystem integration.

- 📱 Social creators might lean toward more accessible tools like Runway or Pika.

There isn’t a single “winner.” Instead, we’re seeing specialization:

- Sora = advanced world simulation

- Veo 3 = cinematic precision and production polish

The Bigger Picture: The Future of AI Video

The competition between Veo 3, Sora, and other models signals a larger shift:

- AI video is moving toward full short-film generation.

- Physics realism and character consistency are improving rapidly.

- Professional filmmaking workflows are becoming AI-augmented.

- Creative barriers to entry are dropping dramatically.

We’re entering an era in which high-quality video production depends less on massive budgets and more on capable models and strong creative direction.

Final Thoughts

Veo 3 represents Google DeepMind’s push into high-end cinematic AI video generation, directly competing with OpenAI’s Sora. While both models are remarkably capable, their strengths differ slightly:

- Sora excels in realistic world simulation and long-form coherence.

- Veo 3 shines in cinematic control and production-level polish.

As these systems continue to evolve, the real winners may not be any single model but the creators who learn to use them effectively.

The AI video revolution is just getting started. 🎥✨